Full speed ahead with open science

Jeroen Bosman & Bianca Kramer

Crick Institute – October 19, London. Some 35 researchers and 20 publishers, funders and librarians gather at the magnificent, brand-new premises of the Francis Crick Institute, between the equally magnificent St. Pancras railway station and the British Library. The Crick Institute is a partnership between the Medical Research Council, Cancer Research UK, the Wellcome Trust and three London universities – Imperial College London, King’s College London and University College London.

Open Research London – The meeting was organised by Open Research London (@OpenResLDN), an irregularly recurring event originally started by Ross Mounce, John Tennant and Torsten Reimer and now coordinated by Frank Norman from the Crick Institute. Open Research London (ORL) is an informal group formed to promote sharing of and collaboration in research. This specific ORL meeting focused on Open Science tools and workflows. There were presentations on bioRxiv and Wellcome Open Research, and we moderated a one-hour mini workshop.

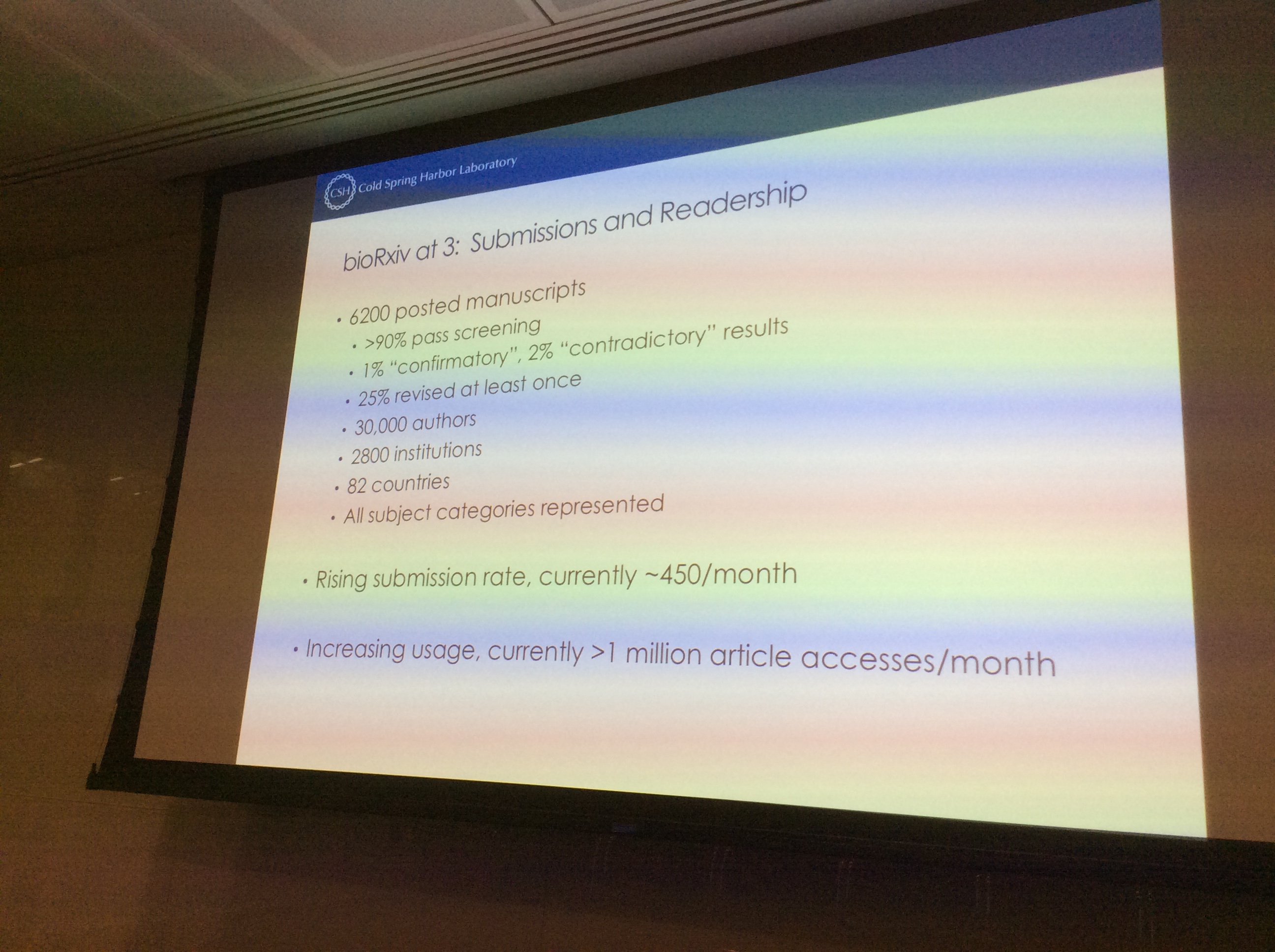

bioRxiv – John Inglis, executive director of Cold Spring Harbor Laboratory Press and co-founder of bioRxiv, told us how this preprint archive, launched in November 2013, was modeled on arXiv, the preprint platform that over the last two decades has gained a dominant position in the sharing of papers in physics, astronomy and mathematics. Cold Spring Harbor Laboratory Press manages bioRxiv, which is aimed at the broad spectrum of life sciences, from computational biology to paleontology. So far, over 6,200 papers have been accepted from over 30,000 authors, with current submission levels at ~450/month.

bioRxiv has rejected fewer than 10% of submissions, for example because they were off topic or contained plagiarism. First indications are that some 60% of the papers shared through bioRxiv are published within two years. The initial success of bioRxiv is at least partially ascribed to its high-level support, with for instance Anurag Acharya (Google Scholar) and Paul Ginsparg (arXiv) on the advisory board. bioRxiv now seems to be out of the experimental phase, as it is accepted by researchers and many journals. Still, it is working on further improvements together with HighWire, which hosts the archive. They are also trying to find solutions for some open issues, such as what to do with a preprint when the related journal article is retracted.

Wellcome Open Research – Equally interesting is the experimental online journal Wellcome Open Research, presented by Robert Kiley (Head of Digital Services at the Wellcome Library). While its setup, with fast publishing, post-publication peer review and a focus on sharing all kinds of research outputs, is already innovative, the real experiment lies in ownership and author selection. This is a funder’s journal (anyone know of other examples?), and submissions are restricted to papers, datasets etc. with at least one Wellcome-funded (co-)author. The journal, hosted and operated by F1000, will be fully open, with expected (but internally paid) APCs of between US$150 and US$900.

Open Science workflow – We were granted the second half of the evening and led the group in a pursuit of Open Science workflows. For that we built on the insights and material developed in the 101 innovations in scholarly communication project. After a short introduction on the concept of workflows, illustrated by some hypothetical workflow examples, the participants got into action.

First, in pairs, they briefly discussed the function and use of specific scholarly communication tools, which had been handed to them as small button-like plastic circles. Next, people had to match ‘their’ tool to one of the 120+ cards with research practices that hung on the wall, organized by research activity. Of course, people could create additional cards and circles with practices and tools.

Then came the most exciting part: we jointly composed an open science workflow by taking cards with research practices that should be part of such a workflow from the wall and hanging them together on an empty canvas with 7 research phases. In what little time remained after that we annotated some parts of the resulting workflow with suns and clouds, indicating specific enabling and constraining factors. The resulting workflow consisted of 69 practices of which 31 were supported by some 50 tools.

The whole process was actually less chaotic and messy than expected, though it would have been good to discuss the resulting workflow in more depth. Is it not too crowded? Are all practices relevant for every researcher? Why were some practices included and others left out? Which tools work well together and which don’t? And what about the practices that have no supporting tool attached to them: was that caused simply by lack of time during this session?

Though there was indeed not enough time to dive into those questions, the participants seemed quite interested to see the final result and keen to work on this together. And if we left any pieces of tape etc. on the wall, we sincerely apologize to Crick’s 😉

For us, the meeting brought a very good end to three intensive days that also included a workshop and presentation for librarians at Internet Librarian International on research support by libraries. And one of the great pleasures was meeting @DVDGC13!

Fun read, and good to hear that your workshop format worked so well!

“69 practices of which 31 were supported by some 50 tools”. Does this mean that in general several tools were used per practice?

Yes, though distributed unevenly. And this was limited because of the group size.