
Nine reasons why Impact Factors fail and using them may harm science

N.B. This post contains updates, marked in square brackets [ ].

Over the past few months I have come across many articles and posts highlighting the detrimental effects of the enormous importance scholars attach to Impact Factors (IFs). I feel that many PhD candidates and other researchers want to break out of the IF rat race, but obviously without risking their careers. It is good to see that things are changing, slowly but surely, so this seems a good moment to sum up succinctly what is actually wrong with Impact Factors.

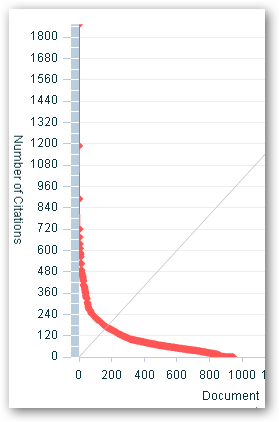

1) Using Impact Factors to judge or show the quality of individual papers or of their authors is a clear ecological fallacy: it attributes a property of a group (the journal) to its individual members. In bibliometrics, normal distributions are very rare, and consequently mean values such as the IF are weak descriptors. This certainly holds for the citation distributions of articles published in a journal. They are often very skewed, with a small number of very highly cited articles. The citation distributions of high-IF journals are probably even more skewed.

A typical journal citation distribution: Citations in 2011-2013 to Nature articles published in 2010 (made 20131103 from Scopus data)

You simply cannot say that a paper is better because it has been published in a high-IF journal. For decades now the producers of the Impact Factor and bibliometricians have warned against using journal averages to judge single papers or authors. The Journal Citation Reports state: “You should not depend solely on citation data in your journal evaluations. Citation data are not meant to replace informed peer review. Careful attention should be paid to the many conditions that can influence citation rates such as language, journal history and format, publication schedule, and subject specialty.” Judging papers by their IF actually means judging the ability of papers and authors to get accepted by editors and to pass peer review. That ability is not all there is to the quality or importance of a paper, and it is simply too small a basis for decisions on tenure, promotion, grants etc. Recently there has been evidence that, with the introduction of online journals and search engines, the correlation between IF and a paper’s citations is weakening [id. at ArXiv].
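To make the ecological-fallacy point concrete, here is a small Python sketch using a made-up, deliberately skewed set of citation counts for 20 papers in one hypothetical journal (the numbers are illustrative, not real data). It compares the mean, which is what an IF-like average reports, with the median, and shows how a single extremely cited paper moves the mean while leaving the median untouched.

```python
from statistics import mean, median


def summarize(citations):
    """Return (mean, median, share of papers cited less often than the mean)."""
    avg = mean(citations)
    below = sum(1 for c in citations if c < avg) / len(citations)
    return avg, median(citations), below


# Hypothetical citation counts for 20 papers in one journal (illustrative only):
# most papers are cited a few times, two are cited far more often.
citations = [0, 0, 1, 1, 2, 2, 2, 3, 3, 4, 4, 5, 5, 6, 7, 8, 10, 12, 40, 85]

avg, med, below = summarize(citations)
print(f"mean={avg:.1f} median={med:.1f} below mean={below:.0%}")
# prints: mean=10.0 median=4.0 below mean=80%

# Add one hypothetical outlier paper with 2,000 citations.
avg, med, below = summarize(citations + [2000])
print(f"mean={avg:.1f} median={med:.1f} below mean={below:.0%}")
# prints: mean=104.8 median=4.0 below mean=95%
```

In this toy example most papers sit well below the average, and one outlier multiplies the mean roughly tenfold without changing the typical paper at all.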

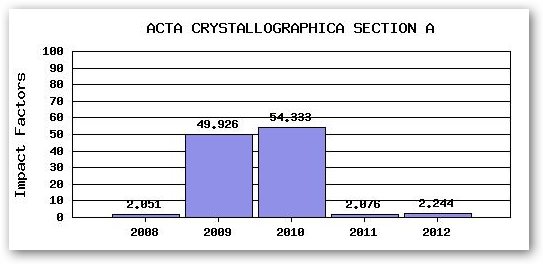

[Update 20131106: How outliers may influence Impact Factors is exemplified by the extreme fluctuations of the IF of Acta Crystallographica A in recent years. The article “A short history of SHELX” was cited tens of thousands of times (perhaps also because its abstract explicitly asks readers to cite it), sending the journal’s IF skyrocketing. Authors of average or mediocre papers in the 2009 and 2010 volumes must have been pleasantly surprised to be able to mention IFs of 49 and 54! In less extreme forms this happens with many journals.]

2) The use of Impact Factors creates and sustains a double Matthew effect. The disproportionate attention paid to high-IF journals means that the same paper, once accepted by such a journal, will get far more eyeballs and will even receive more citations than it would have received in another journal. Related to this is the effect that authors and assessors deem a paper better just because it was published in a journal with a high IF or rejection rate, and may think that citing papers from high-IF journals is the safe way to avoid comments from referees. Thus, part of the citations these papers receive are a free ride on the visibility and citability of the well-known journal brands in which they are published.

3) Impact Factors calculated by Thomson Reuters (formerly ISI) are available for only a minority of all journals. Only 10,853 of the approximately 30,000 scholarly journals are included in the Journal Citation Reports. Arts and humanities journals are left out, as are the majority of non-English journals. It is very difficult for a journal to join the club. That might be acceptable if admission were based solely on stringent quality control. But inclusion in the Journal Citation Reports, in which Impact Factors are published, also depends on how often a journal is cited in journals that are already included. Meeting that criterion is made harder by the fact that authors like to cite papers from (high-)IF journals. As long as your journal is out, it is difficult to get in. By taking papers seriously only if they are published in journals with an Impact Factor, we hinder the free development of science.

Related to this is the US/UK bias in inclusion. Historically, ISI/Thomson built the Science Citation Index on US learned society journals, followed by UK journals and later also the vast number of English-language journals from commercial publishers, including Elsevier from the Netherlands and Springer from Germany. But most journals from other parts of the world were left out. In ISI/Thomson terminology, the English-language and mostly US/UK-based publications are “international journals”, while all journals from the rest of the world are termed “regional journals”.

To be fair, it is good to see that Thomson has recently started to internationalize the journal base of its citation indexes, presumably due to competition from its rival Scopus, which indexes vastly more journals (21,500). We now also have freely available Scopus-based metrics such as the SJR (status normalized, by SCImago) and the SNIP (field normalized, by CWTS). Although it is nice to have these alternatives for so many more journals, they share most of the issues with Impact Factors described here.

Of course, giving too much weight to Impact Factors gives an even more limited view of real relevance, importance or promise in fields where large shares of the output are not in journals at all, but in books, reports, code, software and more. For example, at Utrecht University refereed journal articles made up 96% of the output items in medicine, but only 77% in the social sciences and 32% in the humanities.

4) Impact Factors are irreproducible and not transparent. It is not possible to reproduce Impact Factors using Web of Science data. What is also often not realized is that Impact Factor levels differ from average citation levels. That has to do with publication types. In the numerator of the Impact Factor, citations to all publication types are counted, but in the denominator only articles and reviews are counted. That means that journals publishing lots of content other than articles and reviews, such as letters and notes, receive a free boost to their Impact Factors.
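The numerator/denominator mismatch can be sketched in a few lines of Python. The publication counts and citation numbers below are hypothetical, chosen only to illustrate the arithmetic; the rule applied is the one described above (citations to all items in the numerator, only articles and reviews in the denominator). The document-type labels `letter` and `editorial` are assumptions for illustration.

```python
# Hypothetical two-year output of one journal (illustrative numbers only):
# document type -> (number of items, citations they received in the census year)
output = {
    "article": (180, 900),
    "review": (20, 100),
    "letter": (200, 150),
    "editorial": (100, 50),
}
CITABLE_TYPES = {"article", "review"}  # only these enter the denominator

# Numerator: citations to *all* items, whatever their type.
numerator = sum(cites for _, cites in output.values())
# Denominator: only the "citable" items (articles and reviews).
denominator = sum(n for doc_type, (n, _) in output.items() if doc_type in CITABLE_TYPES)
impact_factor = numerator / denominator

# For comparison: average citations per article/review, counting only their own citations.
citable_cites = sum(c for doc_type, (_, c) in output.items() if doc_type in CITABLE_TYPES)
mean_per_citable_item = citable_cites / denominator

print(f"IF-style ratio: {impact_factor:.1f}")                          # prints 6.0
print(f"mean citations per article/review: {mean_per_citable_item:.1f}")  # prints 5.0
```

In this made-up case, the 200 citations to letters and editorials raise the ratio from 5.0 to 6.0 without adding a single item to the denominator.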

[Update 20131106: General science journals such as Nature and Science typically profit substantially from these free rides, because they publish a lot of material (letters etc.) that gathers citations for their numerator but is not counted in their IF denominator.] [Update 20131105: The lack of transparency is clearly demonstrated by the changing numbers of articles taken into account for the same journal in the same year in different JCR editions, as shown by Björn Brembs, and by the negotiability and unclear process of Thomson’s assignment of document types, as shown in this PLoS Medicine editorial.]

5) Related to the previous point, Impact Factors are affected by the mix of publication types in a journal. In particular, the share of review articles positively affects the IF, as these articles have very high citation rates. Even when you publish a regular primary research paper in a journal with many review articles, you benefit, in a world where Impact Factors are seen as a proxy for your paper’s quality or importance.
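The effect of the review share can be illustrated with another hypothetical calculation. Assume, purely for illustration, that research articles in two journals are cited equally often (on average 3 times in the census year) while reviews are cited 15 times; the only difference between the journals is the share of reviews. The function name `if_with_reviews` is hypothetical.

```python
def if_with_reviews(n_articles, cites_per_article, n_reviews, cites_per_review):
    """IF-style ratio for a journal publishing only articles and reviews."""
    total_cites = n_articles * cites_per_article + n_reviews * cites_per_review
    return total_cites / (n_articles + n_reviews)


# Hypothetical journals: identical research articles, different review shares.
journal_a = if_with_reviews(n_articles=100, cites_per_article=3, n_reviews=0, cites_per_review=15)
journal_b = if_with_reviews(n_articles=80, cites_per_article=3, n_reviews=20, cites_per_review=15)

print(f"Journal A (no reviews):  {journal_a:.1f}")   # prints 3.0
print(f"Journal B (20% reviews): {journal_b:.1f}")   # prints 5.4
```

Under these assumptions a research paper cited 3 times would be credited with an IF of 5.4 in journal B but only 3.0 in journal A.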

6) (Coercive) journal self-citations are one of the more perverse effects of relying too heavily on Impact Factors. Journals want to compete and attract attention and citations, which are at least partially based on their IFs. Some journal editors bluntly ask their authors to cite content from the journal they publish in. If the practice is gross enough to be detected, Thomson Reuters punishes the journal by temporarily removing it from the Journal Citation Reports, but one wonders what goes undetected. In the 2012 edition no fewer than 65 journals were suppressed because of these kinds of ‘anomalies’.

Another case is that of editorial self-citations: editorials citing the journal’s own recent papers. These may in some cases account for over 20% of the Impact Factor.
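How much self-citations can contribute is simple arithmetic. The sketch below uses hypothetical numbers: a journal with 200 citable items receives 1,000 citations in the census year, 250 of which come from its own pages (for example from editorials). The helper name `if_without_self_citations` is illustrative, not an official metric definition.

```python
def if_without_self_citations(total_cites, self_cites, citable_items):
    """Return (IF-style ratio, same ratio excluding self-citations, self-citation share)."""
    with_self = total_cites / citable_items
    without_self = (total_cites - self_cites) / citable_items
    return with_self, without_self, self_cites / total_cites


# Hypothetical journal: 1,000 citations, 250 of them self-citations, 200 citable items.
with_self, without_self, share = if_without_self_citations(1000, 250, 200)
print(f"with self-citations:    {with_self:.2f}")     # prints 5.00
print(f"without self-citations: {without_self:.2f}")  # prints 3.75
print(f"self-citation share:    {share:.0%}")         # prints 25%
```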

7) There is a correlation, but no clear-cut relation, between citation numbers and paper quality. All citation metrics are biased by other factors. [Update 20131210: there is not even hard evidence for a correlation, partly because of the vagueness of the ‘quality’ concept.] Perhaps the most important other factor is visibility. For a paper to get cited, it first of all needs to be encountered by potential citing authors. The chance of such encounters depends on:

- findability (being indexed in WoS, Scopus and Google Scholar, among others)

- attractiveness of the title (e.g. titles mentioning the main finding or result)

- language

- whether the journal is licensed (e.g. as part of a library big deal with main publishers)

- whether the journal has Open Access

- whether the journal has many personal subscriptions

- whether the paper has already been cited (note that the ranking of results in Google Scholar takes the number of citations received into account)

- promotion through social media, researcher profiling sites etc.

All these visibility factors play their role in the chance of being cited, and thus in the Impact Factor. None of them has anything to do with paper quality or importance.

There are more distorting factors that make the perception that IFs reflect journal quality untenable. We need to mention citation sentiment: papers may be cited for lots of reasons, and not all citations are endorsements.

8) Impact Factors do not allow cross-discipline comparison and may indirectly make interdisciplinary work less attractive [id. at ArXiv]. Publication and citation cultures are very diverse across disciplines. Differences in the average number of citations per paper and in the distribution of citations over time make the average Impact Factors of fields vary enormously. While the two-year citation window used in the standard Impact Factor may capture the peak or bulk of citations to papers in molecular biology, it certainly does not in mathematics or geology. Using a five-year window solves that to a certain extent, but the problem of different average numbers of references per paper in the various fields remains. When researchers use the IF to select the journals they submit their papers to, it may even prevent them from doing interdisciplinary work with fields that have lower IFs, the more so because interdisciplinary journals are often younger and still building up their reputation. Using SNIP or Eigenfactor metrics, both field normalized, may redress this particular problem, but not most of the other issues.
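The window problem can be illustrated with two hypothetical citation-age profiles, giving the percentage of a typical paper's first-decade citations received in each year after publication: one for a fast-citing field and one for a slow-citing field. The profiles are invented for illustration and do not describe any real discipline. The sketch computes what fraction of those citations a two-year and a five-year window would capture, counting from the first year after publication (the publication year itself is not counted in the IF window).

```python
# Hypothetical citation-age profiles (illustrative only): percentage of the citations
# a typical paper receives in years 1..10 after its publication year.
profiles = {
    "fast-citing field": [10, 25, 20, 15, 10, 7, 5, 4, 2, 2],
    "slow-citing field": [2, 6, 9, 11, 12, 12, 12, 12, 12, 12],
}


def window_share(profile, window_years):
    """Fraction of the profile's citations that falls within the first `window_years` years."""
    return sum(profile[:window_years]) / sum(profile)


for field, profile in profiles.items():
    two = window_share(profile, 2)
    five = window_share(profile, 5)
    print(f"{field}: 2-year window {two:.0%}, 5-year window {five:.0%}")
# prints:
# fast-citing field: 2-year window 35%, 5-year window 80%
# slow-citing field: 2-year window 8%, 5-year window 40%
```

With these made-up profiles, widening the window to five years narrows the gap between the two fields but does not close it, and it does nothing about differences in the number of references per paper.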

9) Impact Factors have a long delay. Impact Factors reflect citations to papers published in print between 1.5 and 4.5 years ago (the Journal Citation Reports are published in June, reflect citations made in the previous year to papers published in the two years preceding that year, and remain in use until the next edition appears a year later). The papers themselves may be even older, because of the time lag between online and print publication and the time that passes between finishing the manuscript and publication. By the way: long time lags between online and print publication distort citation figures, because papers in journals with such long gaps have more time to gather citations. The problem with this delay is that it tells us next to nothing about the attractiveness of more recent papers. Article-level metrics, such as downloads and uptake by other media as reflected in altmetrics, do have this capability, but they have their own problems of interpretation.
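Where the 1.5 to 4.5 year range comes from can be checked with a little arithmetic. The sketch below assumes that the JCR covering census year Y appears around mid-year Y+1 (modelled as Y + 1.5 in fractional years, approximating June) and that each edition stays in use until the next one appears a year later. Publication years are treated as running from 1 January to 31 December; the function name is illustrative.

```python
def cited_paper_age_range(census_year, release_offset=0.5):
    """Ages (in years) of the papers behind an Impact Factor.

    Assumptions (illustrative): the IF for census year Y counts citations made in Y
    to papers published in Y-2 and Y-1; it is released at Y + 1 + release_offset
    (roughly mid-year) and replaced by the next edition one year later.
    """
    oldest_publication = census_year - 2   # 1 January of year Y-2
    newest_publication = census_year       # end of year Y-1, i.e. start of year Y
    released = census_year + 1 + release_offset
    replaced = released + 1
    youngest_at_release = released - newest_publication
    oldest_at_release = released - oldest_publication
    oldest_at_replacement = replaced - oldest_publication
    return youngest_at_release, oldest_at_release, oldest_at_replacement


young, old_release, old_replaced = cited_paper_age_range(2012)
print(f"at release: {young} to {old_release} years old")          # 1.5 to 3.5
print(f"just before replacement: up to {old_replaced} years old")  # 4.5
```

The endpoints 1.5 and 4.5 match the range given in the text; the online-first and manuscript lags mentioned there would push the real ages of the work higher still.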

This list of issues shows that, although there are some inherent problems with the Impact Factor, most problems are caused by using it to support decisions it was never intended to support, and by the perverse effects this has.

IFs were made for librarians to support journal subscription decisions [Update 20131106: actually, the first intended use was for ISI itself: to know which journals should be added to the Science Citation Index]. Citation indexes were made to discover related literature, follow discussions or trace new research topics. Not even in his wildest dreams did Eugene Garfield envision researchers quoting IFs on their homepages, in tenure, promotion and grant applications, or over a glass of beer or wine in a bar. By the way, even for librarians Impact Factors are becoming less useful, because journals are increasingly selected by package or publisher (whatever you may think of that), because many journals lack an Impact Factor, and because many journals are now Open Access. Speaking of librarians: they play an important role, as they are often involved in information literacy courses that teach how to select quality publications and how to evaluate resources. I truly hope they will help lead us away from Impact Factor madness, focus on teaching skills to evaluate content instead of status, and map the information landscape beyond IF journals.

All this is not new. But in the last decade the focus on Impact Factors and their perverse effects has reached such a degree that it hinders the free development of science, causing frustration and costing time and money. But things may change. The Research Excellence Framework (REF) in the UK has already stated that using rankings or metrics in evaluation is no longer allowed, and the Australian ERA may do the same. Many societies and thousands of scholars have signed the San Francisco Declaration on Research Assessment (DORA), which states: “Do not use journal-based metrics, such as Journal Impact Factors, as a surrogate measure of the quality of individual research articles, to assess an individual scientist’s contributions, or in hiring, promotion, or funding decisions”. There is increasing evidence of the adverse effects of reliance on rankings, but there are no easy solutions. New movements of scholars advocating a fundamental reorientation, such as Science in Transition in the Netherlands, are gaining momentum. The intention is not to throw away all that we have, but to reorient our tools and habits to make science even more valuable.

Jeroen Bosman, @jeroenbosman
