I&M2.0

NWO – the impact factor paradox

[January 11, 2017: update added at the end of the post]

While there are many reasons not to use the journal impact factor (IF) for the assessment of individual research articles, researchers or research groups, its use for this purpose is still widespread, particularly in the sciences. Members of all parties involved (researchers, research institutions and funders) claim to want to move away from using IF for assessment purposes (see DORA signatories, which include the VSNU). However, in practice they often hold each other hostage to a system where publishing in ‘high-impact journals’ is in itself considered a mark of excellence*. To break free from this pattern, someone has to make the first move.

In the Netherlands, the government funding agency NWO can be seen as holding a key position in this regard. As long as researchers expect NWO to use IF as an important assessment criterion, the majority of them will play along, for fear of diminishing their funding chances. So what, exactly, does NWO ask for in this respect?

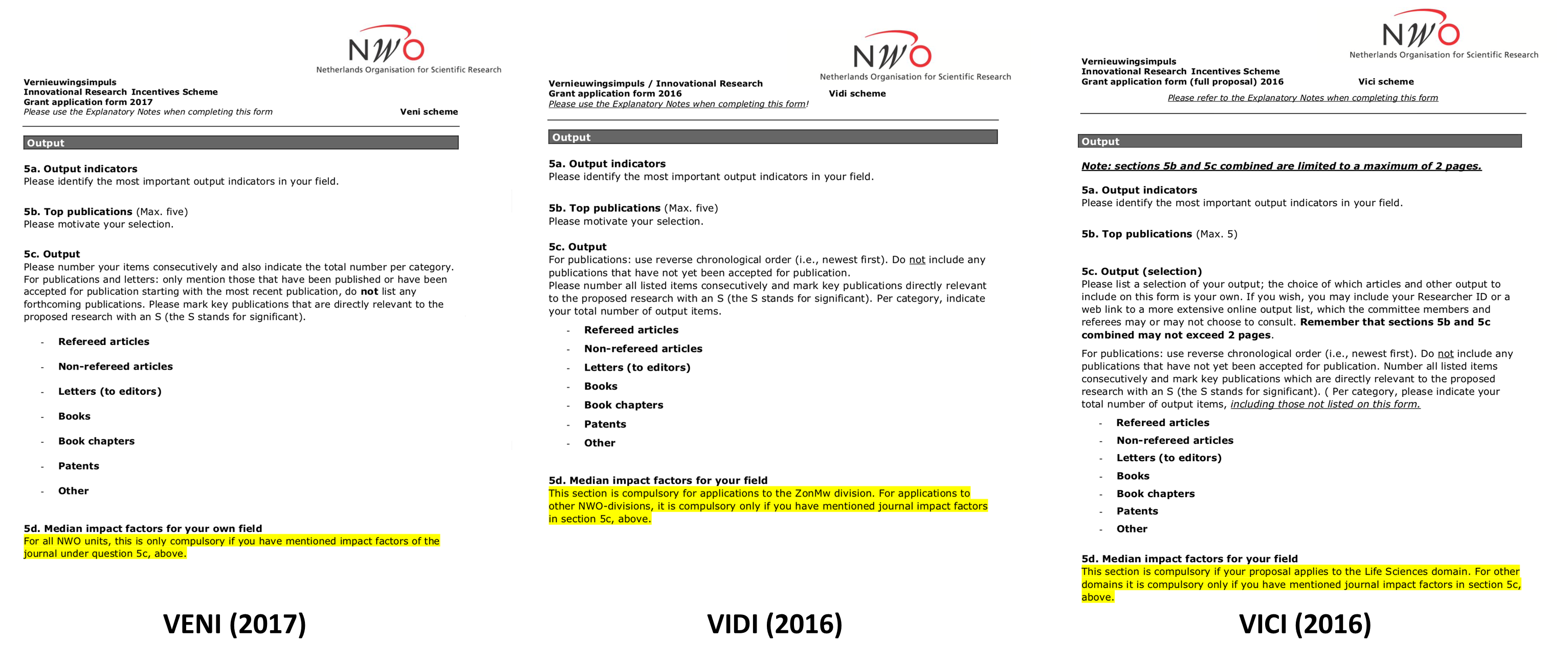

Somewhat surprisingly, three of the main NWO funding programmes, the VENI, VIDI and VICI schemes, do not specifically ask applicants to list IFs for their published papers, though all three of them leave this as an option. The VIDI and VICI programmes do require listing the median IF for the applicant’s field, but only for applications from Medical Sciences (VIDI) and the broader domain of Life Sciences (VICI). Inclusion of this metric in other disciplines is voluntary, but required if applicants decide to include IFs for individual publications (Figure 1).

As for other NWO funding programmes, there is at least one (Rubicon) for which inclusion of IFs for the applicant’s journal articles is compulsory (in the 2016 round), but also many others for which it isn’t. Application forms for these programmes generally ask for a list of selected publications, but do not specifically mention IF.

Figure 1. Mention of impact factors (highlighted in yellow) in the most recent NWO-application forms for VENI, VIDI and VICI grants

The question then is: do applicants decide to list IFs anyway because they think it is to their benefit or even expected, also when it is not compulsory? And the other side of the coin: do referees and members of selection committees take journal status into account even when IFs are not required in the application?

It would be interesting to survey prior applicants to gain insight into the first question. As to the second, there are indications that the assumptions made here are true in at least some cases. In 2014-2015, Volkskrant journalist Martijn van Calmthout was allowed to follow the assessment of applications for the VIDI programme for Earth and Life Sciences (at that time, including IFs in the application was also not compulsory). He was allowed access to the normally confidential deliberations of the selection committee. In the resulting newspaper article, van Calmthout reports remarks on the weight of publication lists and the value of publications in specific ‘high-ranking’ journals. Committee members also commented on a candidate’s H-index, even though that, too, was not a metric requested in the application.

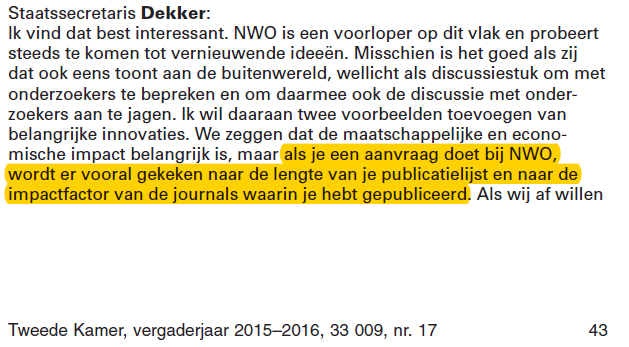

Even though the extent to which IFs weigh into NWO’s assessment of candidates is unknown (both for programmes that require listing of IFs and those that don’t), the perception that it is an important criterion is certainly alive and well. One example of this is the remark of Junior Minister Sander Dekker in a parliamentary meeting on national research policy: “(…) if you apply for NWO-funding, you are mainly judged on the length of your publication list and the impact factor of the journals you published in” (Figure 2).

Figure 2. Remark of Junior Minister Dekker in parliamentary meeting on national research policy (AO Wetenschapsbeleid, April 20 2016)

In the same meeting, Sander Dekker mentioned that internally, NWO is discussing novel ideas for research assessment. He committed to asking the funding agency to share these discussions with the academic community (AO Wetenschapsbeleid April 20 2016, pp. 43-44). One of the options apparently discussed was the suggestion of Spinoza laureate Marten Scheffer of Wageningen University & Research for a model where researchers receive baseline non-competitive funding, with the stipulation that they distribute part of that funding among other researchers (for more information on this idea, see e.g. Volkskrant May 22, 2016, in Dutch).

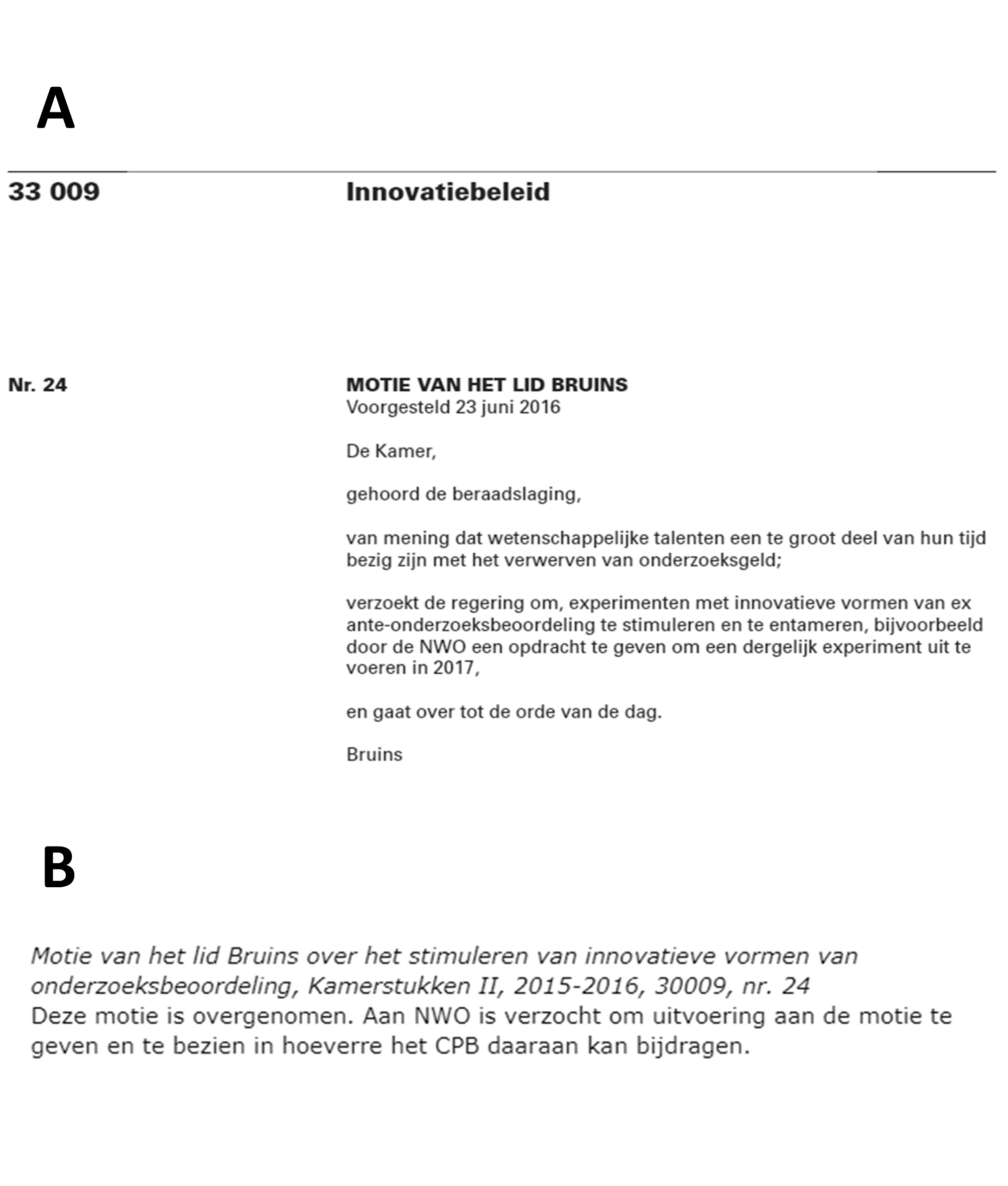

In a plenary parliamentary debate on May 23, 2016, MP Eppo Bruins proposed a motion to experiment with innovative methods for distribution of research funding (or, in other words, ex ante research assessment) (Figure 3A). The motion was accepted by Sander Dekker, and thus did not need to be voted on by parliament. On September 23, Minister Jet Bussemaker made it known that following the motion, NWO was asked to take action on this idea, if possible in collaboration with the Netherlands Bureau for Economic Policy Analysis (CPB) (Figure 3B).

Figure 3. Parliamentary motion by MP Eppo Bruins, June 23, 2016 (A); follow-up by Minister Bussemaker in letter to parliament, September 23, 2016 (B)

As of January 2017, the status of these experiments is unclear. It would be welcome if NWO publicly shared any concrete plans it has in this regard and, more broadly, its thoughts and ideas around good criteria for research assessment, as Sander Dekker promised to request of the funding agency.

Changes in funding instruments are expected following the recent reorganization of NWO, with the aim of harmonizing funding instruments across domains. This looks like a good opportunity to implement and clearly communicate a vision on indicators for research assessment, signalling a move away from IF and stimulating applicants to focus on other ways of demonstrating research impact.

And will we then see NWO sign DORA, too?

[update 20170111: On January 10, NWO announced a ‘national working conference’ on ex ante research assessment. This conference will take place on April 4, 2017 in Amsterdam. Those who wish to attend can express interest through this form, after which a selection will be made. Though NWO stresses knowledge of existing procedures as a condition to apply, I sincerely hope they will include people who have experiences and ideas *outside* current procedures as well, as this will increase both innovative thinking and broader support.

Following the national conference, NWO will also organize an international conference on this theme, aimed at European research councils and other granting organizations. In this regard, it would be interesting to look at the recently formed Open Research Funders Group, a US-based initiative launched in collaboration with SPARC (Scholarly Publishing and Academic Resources Coalition).]

*For a good critique of the concept of excellence in research culture see Excellence R Us, an article by Samuel Moore, Cameron Neylon, Martin Paul Eve, Daniel O’Donnell and Damian Pattinson, shared on Figshare.

Hi Bianca, thanks for this clear summary of the state of affairs!

Regards, roos